InfoSphere DataStage v8.5 introduced an optional add-on, Balanced Optimization. The optimizer can redesign parallel jobs to improve performance, and it applies the following principles in the redesign.

Minimize I/O and data movement

Reduce the amount of source data read by the job by performing computations within the source database. Where possible, move processing of data to the database and avoid extracting data just to process it and write it back to the same database.

Maximize optimization within source or target databases

Make use of the highly developed optimizations that databases achieve by using local indexes, statistics, and other specialized features.

Maximize parallelism

Take advantage of default InfoSphere DataStage behavior when reading and writing databases: use parallel interfaces and pipe the data through the job, so that data flows from source to target without being written to intermediate destinations.

The key takeaway is to do as much as possible within the databases. It seems to me that an experienced DataStage developer could apply these principles to a job far better than a piece of software could. So let me give it a try on a real-world production job.

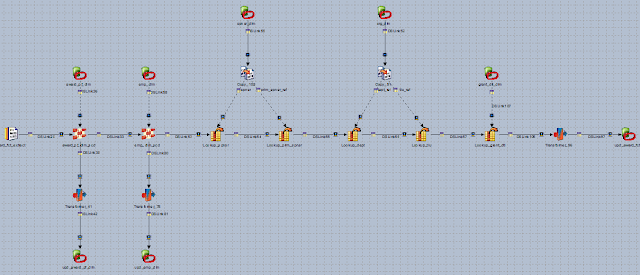

The original design is shown in Fig. 1. All data processing and transformation is performed within the DataStage server, including:

- two slowly changing dimension stages,

- five lookup stages, and

- three transformer stages for adding timestamp columns.

Eight Oracle connector stages serve as data sources/targets only, reading 5 source tables and writing 3 target tables within the same database. No other tasks are performed within the connectors. This type of job is an ideal candidate for balanced optimization.

Figure 1: Original job design

The optimized job is shown in Fig. 2. A single Oracle connector stage replaces all 20 processing and data stages. The main data stream is bulk-loaded into a temp table (Fig. 3), and processed in after-SQL.

Figure 2: Redesigned job applying the principles of Balanced Optimization

Figure 3: Oracle connector configuration in the optimized job

Here are the after-SQL statements.
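To illustrate the general shape of this pattern (not the production statements themselves), here is a minimal sketch. It assumes SQLite in place of Oracle so that it can run anywhere, and every table and column name (`stg_sales`, `dim_customer`, `fact_sales`, `load_ts`) is hypothetical. The job's only work is the load into the staging table. The lookup becomes a join and the timestamp column becomes a bound value, both executed as one set-based statement inside the database.

```python
import sqlite3
from datetime import datetime, timezone

# SQLite stands in for Oracle; all table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY,
                               customer_code TEXT UNIQUE);
    CREATE TABLE fact_sales (customer_key INTEGER NOT NULL,
                             amount REAL,
                             load_ts TEXT NOT NULL);
    CREATE TABLE stg_sales (customer_code TEXT, amount REAL);
    INSERT INTO dim_customer VALUES (1, 'C001'), (2, 'C002');
""")

# Step 1: the job itself only loads the main data stream into the staging table.
rows = [("C001", 10.0), ("C002", 25.5), ("C001", 4.5)]
conn.executemany("INSERT INTO stg_sales VALUES (?, ?)", rows)

# Step 2: the "after-SQL" replaces the lookup stage (a join) and the
# transformer stage (a timestamp bound once per run) with one statement.
load_ts = datetime.now(timezone.utc).isoformat()
with conn:  # commits on success, rolls back if the statement raises
    conn.execute(
        """
        INSERT INTO fact_sales (customer_key, amount, load_ts)
        SELECT d.customer_key, s.amount, ?
        FROM stg_sales s
        JOIN dim_customer d ON d.customer_code = s.customer_code
        """,
        (load_ts,),
    )

loaded = conn.execute("SELECT COUNT(*), SUM(amount) FROM fact_sales").fetchone()
print(loaded)
```

In a real Oracle connector the second step would be entered as the stage's after-SQL rather than issued from client code, and a slowly changing dimension would need additional statements that this sketch does not attempt.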

The performance gain is dramatic: the run time drops from ~30 seconds to ~3 seconds. In addition, I get two bonuses for free.

- The original job is not transactional. If it fails midway, the target tables may be left in an inconsistent state with partial results, and recovering from the failure requires some manual cleanup. The optimized job, on the other hand, is transactional. The updates to the target tables are carried out in the after-SQL statements. If they fail, the changes to the target tables are automatically rolled back.

- If the job fails because of data quality issues, such as lookup failure or unique constraint violation, it is not easy to find the offending rows in the original design. In the optimized design, all intermediate results are kept in the temp table within the same database as the target tables. It is fairly straightforward to pinpoint the offending rows by joining the temp and the target tables.
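Both points can be sketched in a few lines. The following is a minimal illustration, again assuming SQLite in place of Oracle and entirely hypothetical table names (`stg_sales`, `dim_customer`, `fact_sales`, `load_audit`). A staged row with an unknown customer code makes the second statement fail, the whole transaction is rolled back, and an anti-join between the staging table and the lookup table then lists the offending rows.

```python
import sqlite3

# SQLite stands in for Oracle; all table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY,
                               customer_code TEXT UNIQUE);
    CREATE TABLE fact_sales (customer_key INTEGER NOT NULL, amount REAL);
    CREATE TABLE load_audit (note TEXT);
    CREATE TABLE stg_sales (customer_code TEXT, amount REAL);
    INSERT INTO dim_customer VALUES (1, 'C001');
    INSERT INTO stg_sales VALUES ('C001', 10.0), ('C999', 7.0);
""")

def run_after_sql(conn):
    """Apply the staged rows to the targets as a single transaction."""
    with conn:  # rolls back every statement in the block if one raises
        conn.execute("INSERT INTO load_audit VALUES ('load started')")
        # 'C999' has no match, so customer_key is NULL and the
        # NOT NULL constraint on fact_sales rejects the statement.
        conn.execute("""
            INSERT INTO fact_sales (customer_key, amount)
            SELECT d.customer_key, s.amount
            FROM stg_sales s
            LEFT JOIN dim_customer d ON d.customer_code = s.customer_code
        """)

try:
    run_after_sql(conn)
    failed = False
except sqlite3.IntegrityError:
    failed = True

# Neither target keeps partial results after the failure.
fact_count = conn.execute("SELECT COUNT(*) FROM fact_sales").fetchone()[0]
audit_count = conn.execute("SELECT COUNT(*) FROM load_audit").fetchone()[0]

# The staging table is still there, so an anti-join finds the bad rows.
offending = conn.execute("""
    SELECT s.customer_code, s.amount
    FROM stg_sales s
    LEFT JOIN dim_customer d ON d.customer_code = s.customer_code
    WHERE d.customer_key IS NULL
""").fetchall()
print(failed, fact_count, audit_count, offending)
```

How the transaction is scoped in the actual connector depends on its configuration, so the `with conn:` block here only illustrates the all-or-nothing behavior described above.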

In conclusion, balanced optimization (BO) can greatly improve job performance, as well as simplify maintenance. However, it is not necessary to pay the additional license fee if developers can apply the BO principles themselves in job design.
